Polarity? Phase? What is the difference?

By Rob Stewart - JustMastering.com

Bottom line:

- Beware of the name - there is a practical difference between phase and polarity in mixing and mastering

- Use each carefully, and check your mixes in mono while working

Most DAWs and mixing consoles include a "phase" switch, which is actually a “Polarity Reversal” switch. I don’t normally get hung up on terminology, but phase and polarity can help you in different ways when mixing and mastering.

Before I go further, I'll explain that for illustration purposes, the drawings in this article are intended to depict pure sine waves, but they are not precise representations. I'll also add that while the concepts I am discussing here are from the practical (i.e. recording, mixing and mastering) viewpoint, another viewpoint is mathematical. More on that later.

What is phase?

Phase refers to a waveform’s position in degrees of a cycle, or time, relative to another waveform. A waveform’s phase is 0 until you move it forward or backward from its position. This principle is easiest to illustrate using two simple waveforms.

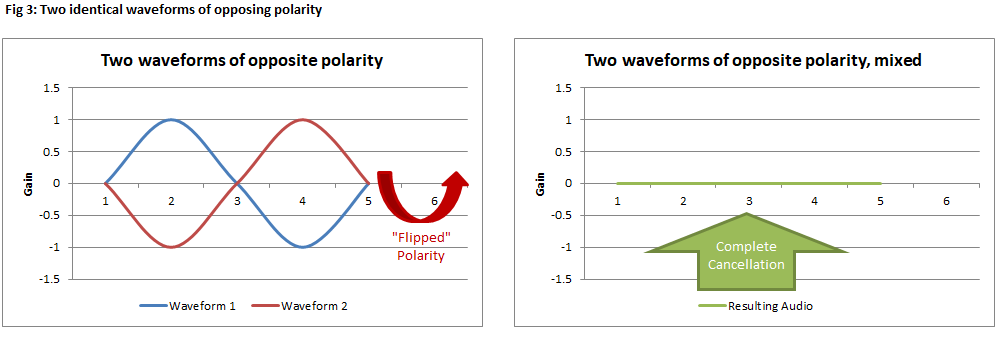

The two waveforms above left are similar except that waveform 2 is at lower gain. They are perfectly in phase with each other and are both of positive polarity. Because they are also the same frequency, when you mix these two together (above, right), they reinforce each other perfectly. The end result is a waveform that is of higher amplitude (i.e. gain) than either waveform 1 or waveform 2 on its own.

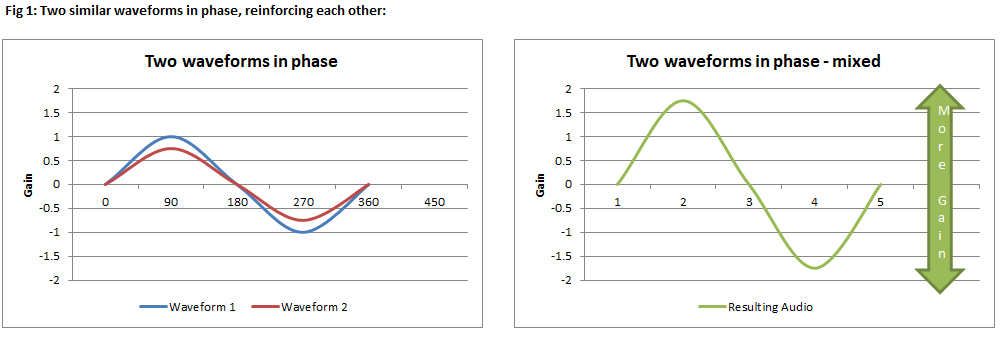

These next two waveforms are also identical, but this time waveform 2 has been phase-shifted 180 degrees (i.e. 1 half cycle forward) relative to waveform 1. If you were to mix these two together (below, right), they cancel each other out. When we are discussing phase, this state is known as “antiphase”.
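As a rough numerical check of the two cases described so far, here is a minimal Python sketch. The 100 Hz frequency, the 48 kHz sample rate, the gain values and the function names are all assumptions chosen for illustration; they are not taken from the drawings.

```python
import math

def sine(freq_hz, amplitude, phase_deg, t):
    """Value of a sine wave at time t (in seconds)."""
    return amplitude * math.sin(2 * math.pi * freq_hz * t + math.radians(phase_deg))

def peak_of_mix(freq_hz, amp1, amp2, phase2_deg, sample_rate=48000):
    """Peak absolute level of waveform 1 + waveform 2 over one second."""
    peak = 0.0
    for n in range(sample_rate):
        t = n / sample_rate
        mixed = sine(freq_hz, amp1, 0.0, t) + sine(freq_hz, amp2, phase2_deg, t)
        peak = max(peak, abs(mixed))
    return peak

# Waveform 2 at lower gain, in phase with waveform 1: reinforcement.
in_phase = peak_of_mix(100, 1.0, 0.5, 0.0)

# Waveform 2 at the same gain, shifted 180 degrees: cancellation.
antiphase = peak_of_mix(100, 1.0, 1.0, 180.0)

print(round(in_phase, 3), round(antiphase, 3))
```

Under these assumptions the in-phase mix peaks at about 1.5 (1.0 plus 0.5), and the antiphase mix peaks at essentially zero.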

Things are simple enough when looking at basic single-frequency waveforms like these. Sounds in nature, such as musical instruments, are much more complex, consisting of many dense layers of frequencies. Shifting the phase of certain frequencies within a complex waveform changes how they interact with the other frequencies in that waveform: some frequencies will be reinforced (and therefore boosted in gain), while others may cancel slightly (or almost completely in extreme cases). For better or worse, the result can sound unnatural, but it can be useful for problem solving or for artistic purposes. A good example of a popular use for phase is the phaser effect.

What is Polarity, then?

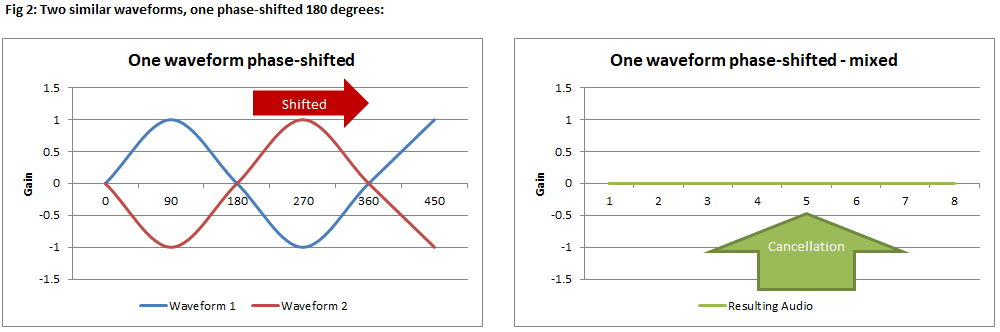

Polarity is either a positive or negative state, relative to the original waveform. A waveform is of positive polarity until you invert or "flip" the polarity to make it negative, relative to its original state. Since polarity can be flipped, you can control that with a switch. A negative polarity version of a waveform will be a mirror image of its positive polarity counterpart. For those who are science buffs, you might imagine positive versus negative polarity being almost like matter and antimatter. Assuming that everything about two waveforms is the same other than their polarity (time, amplitude, etc.), when you mix them together they cancel each other out and you are left with nothing, as illustrated below.

How are Figures 2 and 3 different?

The difference between the negative polarity depicted above, and the 180 degrees of phase shift depicted in Fig 2 is in how we got the waveforms to cancel each other. Remember that with phase, we are shifting the position of a waveform relative to another in time (degrees of cycles). With polarity, we are positioning the waveform as either right side up, or upside down relative to its original state. I.e. we are flipping the +/- orientation without changing the waveform's position in time.
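To make the two operations concrete, here is a small Python sketch that treats a waveform as a list of samples. The sample values and the function names are hypothetical; the waveform is deliberately asymmetric so that the two results are easy to tell apart.

```python
def flip_polarity(samples):
    """Mirror the waveform around zero; no sample moves in time."""
    return [-s for s in samples]

def shift_in_time(samples, n):
    """Delay by n samples (n >= 0), padding the start with silence."""
    return [0.0] * n + samples[:len(samples) - n]

wave = [0.0, 1.0, 0.3, 0.0, -0.2, 0.0]  # hypothetical, asymmetric on purpose

flipped = flip_polarity(wave)     # same timing, upside down
shifted = shift_in_time(wave, 2)  # same shape, two samples later

print(flipped)
print(shifted)
```

The flipped version keeps every peak at the same position in time but inverts its sign, while the shifted version keeps every sign but moves the peaks later.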

This is why the concept of a “phase switch” on a mixing console makes no sense to me. To control phase shift, you either need a dial where you can start at 0 and then adjust the amount of positive or negative phase shift that you want to apply, or a stepped control that has preset shift positions (e.g. 0, 90, 180, 270 etc.). You would also want the ability to choose which frequencies you shift. So again, from a practical mixing and mastering perspective, phase is more complex because you can shift the phase to various degrees at different frequencies but polarity is all or nothing. You can only flip (i.e. reverse) polarity.

But someone once told me that the phase switch flips the phase 180 degrees, though?

From a practical perspective, you can shift the phase 180 degrees forward or backward in time. You can also flip the polarity 180 degrees relative to the original (not in time, but in positive or negative orientation). The “phase switch” on a mixing console actually flips the polarity 180 degrees. It does not change the waveform’s position in time relative to anything.

Having said this, from the mathematical viewpoint that I mentioned at the top of this article, polarity and phase are actually the same thing. Stay with me for the next few practical sections, and then I'll expand on the math.

When to use polarity in recording, mixing and mastering

You can use polarity throughout the recording, production, mixing and even mastering process to help isolate parts of the audio. You’ve likely heard of balanced cables. The concept behind a balanced cable is that both positive and negative polarity versions of the audio are sent down the cable. Both versions will pick up line noise along the way, and the noise knows no different so you end up with “positive polarity noise” applied to both the positive and negative polarity audio. At the end of the line, the negative polarity audio is flipped to positive (which flips the noise that was picked up to negative polarity). So when you combine the two signals you end up with a noise-cancelled version of the audio. Magic! Well, not really. It’s just polarity at work. You can apply this same concept to your recordings and mixes to isolate mid from side (more detail, here), or to isolate other portions of your audio.
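The balanced-cable idea above can be sketched in a few lines of Python. This is a simplified model under stated assumptions: the sample values are hypothetical, the same noise is assumed to land identically on both conductors, and the function name `balanced_receive` is made up for this example.

```python
def balanced_receive(hot, cold):
    """Flip the cold leg and sum it with the hot leg, then halve to restore level."""
    return [(h + (-c)) / 2 for h, c in zip(hot, cold)]

signal = [0.0, 0.5, 1.0, 0.5, 0.0, -0.5]   # hypothetical audio samples
noise = [0.1, -0.2, 0.05, 0.3, -0.1, 0.2]  # hypothetical line noise

# Both legs pick up the same "positive polarity" noise along the cable.
hot = [s + n for s, n in zip(signal, noise)]    # positive polarity audio + noise
cold = [-s + n for s, n in zip(signal, noise)]  # negative polarity audio + noise

recovered = balanced_receive(hot, cold)
print([round(r, 6) for r in recovered])
```

Flipping the cold leg turns its audio positive and its noise negative, so the audio adds while the noise cancels.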

You can be selective with polarity so that you are only using it to impact portions of the audio. You can use a variety of tools to do this, from EQ to gating, to compression. In fact, if you ever want to hear exactly how an effects processor is changing your audio, the easiest way to do that is to take an unprocessed version and a processed version, flip the polarity of the unprocessed version, and then mix the two together. You will end up with the difference between the two, allowing you to hear exactly how your original audio was changed.
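Here is a minimal sketch of that difference (or "null") test in Python. The hard clipper stands in for an arbitrary effects processor and is purely an assumption for illustration, as are the sample values and function names.

```python
def hard_clip(samples, ceiling):
    """Stand-in processor: limit every sample to +/- ceiling."""
    return [max(-ceiling, min(ceiling, s)) for s in samples]

def null_test(dry, wet):
    """Flip the polarity of the dry signal and mix it with the wet signal."""
    flipped_dry = [-d for d in dry]
    return [w + f for w, f in zip(wet, flipped_dry)]

dry = [0.0, 0.5, 1.0, 0.5, 0.0, -0.5, -1.0]  # hypothetical unprocessed audio
wet = hard_clip(dry, 0.8)                     # processed version

difference = null_test(dry, wet)
print([round(d, 6) for d in difference])
```

Wherever the processor left the audio untouched the result is silence; only the two clipped peaks survive, which is exactly what the processor changed. In a DAW this only works if the two versions are time-aligned, so any latency added by the processor has to be compensated first.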

Depending upon how you use polarity in your mix, you can create problems with mono compatibility. For example, if you have two identical waveforms panned hard left and hard right, and you flip the polarity of the second waveform, you’ll still hear them in stereo, but in mono you will end up with no audio because they cancel each other out. For this reason, it is a good idea to check your mix in mono as you work.
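The mono problem described above is easy to reproduce numerically. In this sketch the mono fold-down is assumed to be a simple average of left and right, and the sample values are hypothetical.

```python
def mono_sum(left, right):
    """Fold a stereo pair down to mono by averaging the two channels."""
    return [(l + r) / 2 for l, r in zip(left, right)]

source = [0.0, 0.6, 1.0, 0.6, 0.0, -0.6]  # hypothetical waveform

left = source
right_same = source                     # identical copy, hard right
right_flipped = [-s for s in source]    # same copy with polarity flipped

mono_ok = mono_sum(left, right_same)
mono_cancelled = mono_sum(left, right_flipped)

print(mono_ok)
print(mono_cancelled)
```

With the polarity of the right channel flipped, every mono sample comes out as zero even though each channel on its own still carries the full signal.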

Polarity can help widen the stereo image, because it allows us to work in the domain of sum and difference (a.k.a. mid/side – more details here).
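A common way to express sum and difference is sketched below in Python. The scaling by one half and the `width` parameter are assumptions made for this example; real wideners typically do more than scale the side signal.

```python
def encode_ms(left, right):
    """Mid is the sum of the channels, side is the difference (right flipped, then summed)."""
    mid = [(l + r) / 2 for l, r in zip(left, right)]
    side = [(l + (-r)) / 2 for l, r in zip(left, right)]
    return mid, side

def decode_ms(mid, side, width=1.0):
    """Rebuild left/right; width > 1 exaggerates the difference, width = 0 gives mono."""
    left = [m + width * s for m, s in zip(mid, side)]
    right = [m - width * s for m, s in zip(mid, side)]
    return left, right

left_in = [0.2, 0.8, -0.4]   # hypothetical stereo samples
right_in = [0.1, 0.2, -0.6]

mid, side = encode_ms(left_in, right_in)
same_left, same_right = decode_ms(mid, side)             # unchanged stereo
wide_left, wide_right = decode_ms(mid, side, width=1.5)  # wider stereo
mono_left, mono_right = decode_ms(mid, side, width=0.0)  # collapsed to mono
```

Decoding with a width of 1 returns the original channels, which shows that the side signal is nothing more than a polarity flip followed by a sum.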

Polarity is sometimes used to help address comb filtering issues when working with multi-mic or multi-source multitrack recordings, but in my view it is a crude method. While flipping the polarity of a channel will change how the comb filtering effects sound, it offers you no control. You get one state or the other. It is worth a try because it is quick, but use phase shifting if you are looking for more precise control.

When to use phase in recording, mixing and mastering

Because we are dealing with time shifting or degrees of phase shift at certain frequencies, adjusting phase can do damage (smearing, comb filtering). So the question becomes, why do it?

Phase is often used to help correct tone issues caused by comb filtering when combining two captures of the same source, such as the left and right microphones used to capture a piano. Any instrument captured with more than one mic will have phase differences between the two microphones given that they are at different distances from the instrument. That will cause some degree of reinforcement and cancellation at certain frequencies when the signals are combined to mono. You may not be able to completely correct it, but by shifting the phase of a certain range of frequencies within one of the signals, you can “tune” things so that you end up with the most musical sounding result. There are tools available today that allow you to do this such as Waves InPhase.

Delay is another option commonly used to align each microphone feed of a drum track to compensate for timing differences due to mic position. This allows you to take advantage of phase in a simpler way (affecting all frequencies rather than only affecting a range of frequencies) than I described above. Just milliseconds of delay can make a real difference in how the drum kit sounds because of how the delay moves frequencies in time relative to the other tracks. Again, the movement in time changes the phase of each frequency relative to the waveforms in your mix to varying degrees (more about this down below).
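To get a feel for the numbers involved, the sketch below converts a difference in mic distance into a delay, and shows how far that delay rotates the phase at a given frequency. The speed of sound (343 m/s), the 48 kHz sample rate, the example distance and all function names are assumptions for illustration. The notch formula assumes the two signals are summed at equal level.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # assumed: air at roughly 20 °C

def delay_seconds(extra_distance_m):
    """Extra travel time to the more distant microphone."""
    return extra_distance_m / SPEED_OF_SOUND_M_PER_S

def delay_samples(extra_distance_m, sample_rate=48000):
    """The same delay, rounded to whole samples."""
    return round(delay_seconds(extra_distance_m) * sample_rate)

def phase_shift_degrees(freq_hz, delay_s):
    """Phase rotation caused by the delay at one frequency, wrapped to 0-360."""
    return (360.0 * freq_hz * delay_s) % 360.0

def first_notches_hz(delay_s, count=3):
    """Frequencies where the delayed copy arrives 180 degrees out of phase."""
    return [(2 * k + 1) / (2 * delay_s) for k in range(count)]

d = delay_seconds(0.343)  # second mic 34.3 cm further away: about 1 ms
print(delay_samples(0.343))
print([round(f) for f in first_notches_hz(d)])
print(round(phase_shift_degrees(500.0, d), 3))
```

With these assumptions, a path difference of about 34 cm is roughly 1 ms or 48 samples, which places the first cancellations near 500 Hz, 1.5 kHz and 2.5 kHz. Delaying the closer mic by the same amount moves those cancellations out of the way.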

Phase is also used to enhance stereo imaging and width. We have two ears, and so as with a multi-microphone recording setup, our ears pick up sound at different points in time. Our brains use those tiny time differences to locate sounds in the space around us. This is a whole separate field of study called “psychoacoustics”. Using phase, you can take advantage of how we perceive sound by adjusting or even exaggerating the time differences between the left and right channels in your mix.

As with polarity, phase shifting can create problems with mono compatibility so I recommend checking your mix in mono as you work, to make sure that the adjustments you make are not creating bigger problems.

What about using both polarity and phase in recording and mixing?

Absolutely. Phase shifting and polarity reversal are two tools that recording and mix engineers use regularly. They each have their purpose, and introduce benefits and risks.

A recording engineer uses phase to get a clear, mono-compatible stereo capture of an acoustic instrument, such as a piano. They will shift the mics here and there so that 1) they get the best tone, and 2) the two mics interact well together, in mono and stereo (think "phase relationships"). In some situations a recording engineer might choose to flip the polarity of a signal during capture because they know that the result will sound the most natural. This is common when mic’ing acoustic drums, for example, where you have mics on the top and bottom skins of the same drum and you want to make sure that the two signals reinforce each other in a musical and natural way when combined. When you hit the top skin of a drum, it presses into the drum whereas the bottom skin bulges out, and from that perspective the vibrations coming from below the drum are of opposing polarity. The tone is different too, though, so it is best to listen with and without the polarity reversed, and with each mic at different gain levels, before you commit to one mode or the other.

A mix engineer will use polarity and phase together for other reasons. They may want certain aspects of the mix to have a very wide or “beyond the loudspeakers” presentation, so they will use both of these tools to help get the sound they are envisioning. Granted, much of this is automatic these days! When you use a stereo widener plugin, for example, the tool is designed to do much of the thinking for you, giving you a “Width” knob and perhaps a few other control options, which allow you to focus more on the result you are hearing and on ensuring that it isn’t creating other issues.

Ø: The Mathematics of Phase and Polarity

Mixing and mastering engineers usually differentiate phase and polarity as I have above, but audio hardware and software manufacturers often refer to polarity as "phase". You will often see the mathematical symbol for phase - Ø - on the polarity reversal button of a mixing console, for example. As a recordist, mix engineer or mastering engineer, it is important to understand what a button or dial marked with the Ø symbol is actually doing to your audio (flipping its polarity or shifting frequencies in time), because those results will affect the sound of your mix in different ways.

At a mathematical level, there are more similarities between Phase and Polarity than differences, though. I would like to thank Mr. Evan Buswell of New Systems Instruments (https://nsinstruments.com/) for helping me clarify this article, and for providing this detailed explanation:

While polarity and phase are different from a practical (i.e. mixing and mastering) perspective, they are the same thing from a mathematical viewpoint. Mathematics defines phase and polarity as properties of the individual sine waves which make up the complex waveform, rather than properties of the complex waveform itself.

Any complex waveform is made up of several superimposed harmonics of the form A sin(ωt + φ), where A is the amplitude, ω is the angular frequency (2π times the frequency in Hz), t is time, and φ is phase. Since A sin(ωt + 180°) = -A sin(ωt), the identity between a 180° phase shift and a negative polarity should be clear. But how much delay is 180°? Phase is just another name for delay as a proportion of the period (the reciprocal of frequency). That is, d = φ / 360° × λ, where d is delay and λ is the period of the wave. For example, to delay a sine wave at 200Hz by 180°, we delay it by 180° / 360° × 1 / 200Hz = 2.5ms. But now imagine that instead of a pure sine wave we have a complex waveform at 200Hz with a couple of harmonics, at 400Hz and 600Hz. With a little simple algebra on the equation for delay, we can see that φ = 360° × d / λ. So when we delay *the whole waveform* by 180°, i.e. 2.5ms, we are changing the phase of its individual components differently. The 200Hz part is delayed by 180°, as we would expect; but the 400Hz component is delayed by 360°, not 180°; and the 600Hz component is delayed by 540°, which is 180° + 360°. In contrast, when we reverse the polarity of a complex waveform, the phase of every single frequency component is changed by 180°.
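The arithmetic in the paragraph above can be reproduced with two one-line functions. This is only a sketch of the two formulas as stated; the function names are made up for this example.

```python
def delay_for_phase(phase_deg, freq_hz):
    """d = phase / 360 * period, where period = 1 / frequency."""
    return phase_deg / 360.0 / freq_hz

def phase_for_delay(delay_s, freq_hz):
    """phase = 360 * d / period, where period = 1 / frequency."""
    return 360.0 * delay_s * freq_hz

# Delay needed to shift a 200 Hz sine wave by 180 degrees.
d = delay_for_phase(180.0, 200.0)
print(round(d * 1000, 6), "ms")

# The same delay applied to the fundamental and its two harmonics.
for freq in (200.0, 400.0, 600.0):
    print(freq, "Hz:", round(phase_for_delay(d, freq), 6), "degrees")
```

One delay value produces a different phase shift at each frequency, whereas a polarity reversal would contribute the same 180 degrees to all three.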

Complex waveforms are, well, complex. But knowing a couple mathematical principles about how simple sine waves with different phases interact with each other can help us understand what will happen when we delay or reverse the polarity of a complex waveform.

- Altering the phase of a sine wave won't change *anything* else about it. That is, altering the phase won't change the frequency or amplitude. Because we mostly hear frequency and amplitude, and not phase, the audible effect of a phase shift on a single sine wave ranges from very, very subtle to undetectable.

- When you mix two sine waves of the same frequency but different phases together, this results in a single sine wave of the same frequency but a different amplitude. Specifically, mixing together two sine waves of equal amplitude gives you 2 A cos(φ/2) sin(ωt + φ/2), where A is the original amplitude of each wave, and φ is the difference in phase between them. Here is where phase comes back into our hearing, by affecting the volume of certain frequencies in a mix.
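The mixing formula in the second point can be checked numerically. The sketch below assumes both sine waves have the same amplitude A, and the function names and test values are chosen only for illustration.

```python
import math

def mix_direct(amplitude, freq_hz, phase_deg, t):
    """Add the two sine waves sample by sample."""
    w = 2 * math.pi * freq_hz
    phi = math.radians(phase_deg)
    return amplitude * math.sin(w * t) + amplitude * math.sin(w * t + phi)

def mix_formula(amplitude, freq_hz, phase_deg, t):
    """The closed form: 2 A cos(phi/2) sin(wt + phi/2)."""
    w = 2 * math.pi * freq_hz
    phi = math.radians(phase_deg)
    return 2 * amplitude * math.cos(phi / 2) * math.sin(w * t + phi / 2)

def mixed_amplitude(amplitude, phase_deg):
    """Amplitude of the mixed sine wave for a given phase difference."""
    return abs(2 * amplitude * math.cos(math.radians(phase_deg) / 2))

# Largest disagreement between the two versions over a range of phases and times.
worst = max(
    abs(mix_direct(1.0, 200.0, phase, n / 48000) - mix_formula(1.0, 200.0, phase, n / 48000))
    for phase in (0.0, 45.0, 90.0, 120.0, 180.0, 270.0)
    for n in range(480)
)
print(worst)

for phase in (0.0, 90.0, 120.0, 180.0):
    print(phase, round(mixed_amplitude(1.0, phase), 4))
```

The amplitude falls from double the original at 0° to the original level at 120°, and to essentially nothing at 180°.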

Those two things are just about all you need to know about sine waves and phase. We can then extrapolate to complex waveforms:

- Changing the phase or polarity of a complex waveform alone won't affect how it sounds very much, if at all.

- But when mixing two complex waveforms together, whenever they share individual sine wave frequency elements, those elements will get louder or softer depending on their relative phase. Any sine wave frequency elements they don't share will pass through unaffected (which is why mixing works at all), and no new frequencies will be created.

So from a mathematical point of view, this is the difference between changing the phase and polarity of a complex waveform (A) relative to another (B) within a mix:

- When you reverse the polarity of complex waveform A, you change the phase of all its frequency elements equally, by 180°. This changes the phases of the individual frequencies in waveform A relative to B in the mix. For example, if the relative phases in A and B of the 200Hz and 400Hz elements were 170° and 10° respectively, the new relative phase of the 200Hz element will be 180° + 170° = 350°, and the new relative phase of the 400Hz element will be 180°+10° = 190°. As a result, the 200Hz element in the mix will get much louder, and the 400Hz element will get much softer.

- When you delay complex waveform A relative to B, you change the phase of each of its frequency elements by a different amount. This again changes the relative phases of the individual frequencies in the mix, but in a different way. If we take the example above but delay waveform A by enough to shift the 200Hz element by 180° instead of changing polarity, the new relative phase of the 200Hz element will again be 180° + 170° = 350°, but the new relative phase of the 400Hz element will be 360° + 10° = 370°. As a result, the 200Hz element in the mix will again get louder, but the 400Hz element will keep the same volume.
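The two examples above can be worked through in Python. This sketch assumes that the shared 200Hz and 400Hz elements have equal amplitude in A and B, so that the 2 A cos(φ/2) relationship from earlier applies; the function names are made up for this example.

```python
import math

def mixed_level(relative_phase_deg, amplitude=1.0):
    """Level of a shared frequency element in the mix, assuming equal amplitudes."""
    return abs(2 * amplitude * math.cos(math.radians(relative_phase_deg) / 2))

def after_polarity_flip(relative_phases):
    """Polarity reversal adds 180 degrees to every frequency element."""
    return {f: (p + 180.0) % 360.0 for f, p in relative_phases.items()}

def after_delay(relative_phases, delay_s):
    """A delay adds 360 * frequency * delay degrees, so each element moves differently."""
    return {f: (p + 360.0 * f * delay_s) % 360.0 for f, p in relative_phases.items()}

before = {200.0: 170.0, 400.0: 10.0}  # relative phase in degrees, keyed by frequency in Hz
flipped = after_polarity_flip(before)
delayed = after_delay(before, 0.0025)  # 2.5 ms shifts the 200 Hz element by 180 degrees

for name, phases in (("before", before), ("flipped", flipped), ("delayed", delayed)):
    print(name, {f: round(mixed_level(p), 3) for f, p in phases.items()})
```

In both cases the 200Hz element goes from nearly cancelled to nearly doubled. The 400Hz element is nearly cancelled by the polarity flip but keeps its level under the delay, because 370° is the same position in the cycle as 10°.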

Evan and I have covered phase and polarity from both a practical and mathematical perspective so that you are armed with enough information to choose when and how you use them to shape the sound of your recordings and mixes.

Happy Mixing!